
Grid Node Setup

The grid node is the gateway to and from the Open Science Grid. It hosts the Compute Element (CE) and the Storage Element (SE). Because our cluster is relatively small, a single grid node can host several elements; a larger cluster might need to split these duties across separate nodes.

The Grid Management Node is not a built-in Rocks appliance, so its appliance and kickstart files have to be built and installed on the head node before any grid nodes can be installed. The first section of this page describes how our appliance works and how to implement it. If you are following this guide, you will need to customize the kickstart file for your hardware; ours is tailored to our hardware and may not work with other setups.

Create Grid Appliance

Because the grid appliance is not built in, you must create it as a custom Rocks appliance. Further directions on how to create Rocks appliances can be found here.

grid-appliance.xml

Create the graph file that links the grid appliance to the login appliance, so that the grid node inherits the login node's configuration.

[user@grow-prod ~]$ sudo vi /export/rocks/install/site-profiles/5.4/graphs/default/grid-appliance.xml

You can copy and paste the following lines into the file, or download the file by clicking on the link below and saving it in the location above.

grid-appliance.xml
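The contents of our graph file are only available through the link above. As a minimal sketch, a Rocks graph file that makes a node file named grid inherit from the login node could be written as below. The node name `grid` matches the `node='grid'` passed to `rocks add appliance` later on this page; the parent name `login` and the helper `write_grid_graph` are assumptions, so compare the result against the downloaded file before relying on it.

```shell
# Minimal sketch (assumption): write a Rocks graph file whose edge makes the
# "grid" node file include everything from the "login" node file.
# write_grid_graph is a hypothetical helper, not part of Rocks.
write_grid_graph() {
    local out=$1   # destination path for the graph file
    cat > "$out" <<'EOF'
<?xml version="1.0" standalone="no"?>
<graph>
    <description>
    Grid appliance: the login node configuration plus the grid node file.
    </description>
    <edge from="grid">
        <to>login</to>
    </edge>
</graph>
EOF
}

# Example (as root on the head node):
# write_grid_graph /export/rocks/install/site-profiles/5.4/graphs/default/grid-appliance.xml
```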

grid.xml

Create a grid.xml kickstart file in this location.

[user@grow-prod ~]$ sudo vi /export/rocks/install/site-profiles/5.4/nodes/grid.xml

You can also use our kickstart file by clicking on the link below. It installs the xfsprogs package (we need XFS to format the large disk array after this node is installed), contains the partitioning information, and adds firewall rules.

Click here to view our grid.xml file.
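For readers who cannot reach the link, here is a minimal sketch of a node file in the usual Rocks 5.x layout (a `<kickstart>` root with `<package>` entries). It lists only the xfsprogs package mentioned above and the perl-URI package that edg-mkgridmap needs with the RPM installation of OSG; the partitioning and firewall sections of our real grid.xml are not reproduced. The helper name `write_grid_node` is hypothetical, and the sketch assumes the perl-URI RPM has been made available to the Rocks distribution before `rocks create distro` is run.

```shell
# Minimal sketch (assumption): write a Rocks node file that adds two packages.
# Partitioning and firewall sections of the real grid.xml are NOT included.
# write_grid_node is a hypothetical helper, not part of Rocks.
write_grid_node() {
    local out=$1   # destination path for the node file
    cat > "$out" <<'EOF'
<?xml version="1.0" standalone="no"?>
<kickstart>
    <description>
    Grid node: tools for the large XFS array and OSG gridmap support.
    </description>
    <!-- needed to format the large disk array after installation -->
    <package>xfsprogs</package>
    <!-- required by edg-mkgridmap with the RPM installation of OSG -->
    <package>perl-URI</package>
</kickstart>
EOF
}

# Example (as root on the head node):
# write_grid_node /export/rocks/install/site-profiles/5.4/nodes/grid.xml
```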

To apply the changes above to the current distribution, execute:

[root@grow-prod ~]# cd /export/rocks/install
[root@grow-prod install]# sudo rocks create distro

Now we need to add an entry into the Rocks MySQL database. This is accomplished with the rocks command line:

[root@grow-prod install]# rocks add appliance grid membership='Grid' \
    node='grid'

With the RPM installation of OSG, edg-mkgridmap needs the perl-URI-1.35-3.noarch.rpm package, so it must be added to grid.xml (rebuild the distribution afterwards, as described above).

Install Grid Node

Our grid node is a 36-drive storage server. The drives are configured as a single RAID 6 array, which the server sees as one device (/dev/sdc). It is mounted on the server as /data, which is then network mounted on the cluster as /share/data.
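RAID 6 stores two drives' worth of parity, so the usable capacity of an N-drive array is roughly (N - 2) times the drive size, before file system overhead. For our 36 drives that means 34 drives' worth of space, assuming no hot spares. The drive size is not given on this page, so the example below uses a hypothetical 2 TB; also keep in mind that `df -h` reports powers of 1024, so it will print a smaller number than the vendor's decimal total. `raid6_usable` is a hypothetical helper.

```shell
# Usable RAID 6 capacity: two drives' worth of space goes to parity.
# Assumes no hot spares; size is a whole number in any unit (e.g. TB).
raid6_usable() {
    local drives=$1 size=$2
    if [ "$drives" -lt 4 ]; then
        echo "RAID 6 needs at least 4 drives" >&2
        return 1
    fi
    echo $(( (drives - 2) * size ))
}

# Example: raid6_usable 36 2   # prints 68 for hypothetical 2 TB drives
```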

Click here to view the network information for our grid node.

On the head node do the following:

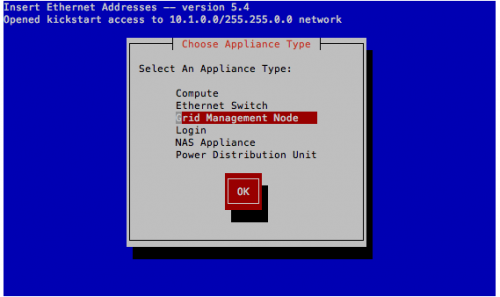

- Run insert-ethers

- Select Grid Management Node

- Power up the machine (be sure to boot via the network).

[root@grow-prod ~]# insert-ethers

Disk Partitioning

Choose either manual or automatic partitioning. Click here to view the partitioning scheme for our grid nodes.

Configure Grid Node Network Info

While logged in to the head node, run the following commands to configure the grid node's public network settings:

[root@grow-prod ~]# rocks set host interface ip grid-0-0 iface=eth1 ip=128.255.88.5
[root@grow-prod ~]# rocks set host interface name grid-0-0 iface=eth1 name=grow-grid
[root@grow-prod ~]# rocks set host interface subnet grid-0-0 eth1 public
[root@grow-prod ~]# rocks add host route grid-0-0 128.255.88.1 eth1 netmask=255.255.254.0
[root@grow-prod ~]# rocks sync config
[root@grow-prod ~]# rocks sync host network grid-0-0
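Before syncing, it can help to confirm that the gateway lies in the same subnet as the public address given to eth1, since with a netmask of 255.255.254.0 the subnet spans two /24 blocks. The sketch below does this with shell arithmetic only (the network address is the address ANDed with the netmask); all four function names are hypothetical helpers, not Rocks commands.

```shell
# Convert a dotted-quad address such as 128.255.88.5 to a 32-bit integer.
ip_to_int() {
    local old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
    local n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Network address = address AND netmask.
network_address() {
    int_to_ip $(( $(ip_to_int "$1") & $(ip_to_int "$2") ))
}

# Succeed if address $1 and gateway $2 are in the same subnet under mask $3.
same_subnet() {
    [ "$(network_address "$1" "$3")" = "$(network_address "$2" "$3")" ]
}

# Example: same_subnet 128.255.88.5 128.255.88.1 255.255.254.0 && echo "same subnet"
```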

Log in to the grid node.

[user@grow-prod ~]$ ssh grid-0-0

Set the GATEWAY variable to the public IP address of your head node. If the variable is not present, add it.

[user@grid-0-0 ~]$ sudo vi /etc/sysconfig/network

- "/etc/sysconfig/network"

NETWORKING=yes
HOSTNAME=grid-0-0.local
GATEWAY=128.255.88.1

Also add the GATEWAY variable, set to the public IP address of your head node, to the eth1 interface file:

[user@grid-0-0 ~]$ sudo vi /etc/sysconfig/network-scripts/ifcfg-eth1

- "/etc/sysconfig/network-scripts/ifcfg-eth1"

DEVICE=eth1
HWADDR=00:25:90:0b:16:2b
IPADDR=128.255.88.50
NETMASK=255.255.254.0
BOOTPROTO=static
ONBOOT=yes
MTU=1500
GATEWAY=128.255.88.1
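Both files follow the same KEY=VALUE convention, and the instruction is the same for each: change GATEWAY if it exists, otherwise add it. A minimal sketch of that edit as a shell function (the name `set_sysconfig_var` is hypothetical; it relies on GNU `sed -i`, and a value containing `|` or `&` would need escaping):

```shell
# Set KEY=VALUE in a sysconfig-style file: rewrite the existing KEY= line
# if there is one, otherwise append a new line at the end of the file.
set_sysconfig_var() {
    local file=$1 key=$2 value=$3
    if grep -q "^${key}=" "$file"; then
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Example (as root on the grid node):
# set_sysconfig_var /etc/sysconfig/network GATEWAY 128.255.88.1
# set_sysconfig_var /etc/sysconfig/network-scripts/ifcfg-eth1 GATEWAY 128.255.88.1
```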

Now restart the network service so the new settings take effect:

[root@grid-0-0 ~]# service network restart

Install XFS, Format and Mount the Large Array

- Install XFS.

- Create the file system (the RAID 6 array is /dev/sdc).

- Create the /data directory if it is not present.

- Mount /dev/sdc on /data.

[root@grid-0-0 ~]# yum install kmod-xfs xfsdump xfsprogs
[root@grid-0-0 ~]# mkfs.xfs /dev/sdc
[root@grid-0-0 ~]# mkdir /data
[root@grid-0-0 ~]# mount -t xfs /dev/sdc /data

Edit /etc/fstab so the new file system is mounted automatically at boot.

[root@grid-0-0 ~]# vi /etc/fstab

Add the line:

- "/etc/fstab"

/dev/sdc /data xfs defaults 1 1
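If this step is scripted rather than done in vi, it is worth making it safe to re-run so /data never ends up in fstab twice. A minimal sketch, assuming the standard six-field fstab format where field 2 is the mount point (`ensure_fstab_entry` is a hypothetical helper):

```shell
# Append an fstab entry unless a non-comment line already uses the same
# mount point (field 2). Returns 0 in both cases.
ensure_fstab_entry() {
    local fstab=$1 entry=$2 mnt
    mnt=$(printf '%s\n' "$entry" | awk '{print $2}')
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m {found=1} END {exit !found}' "$fstab"; then
        echo "$mnt is already in $fstab; leaving it unchanged" >&2
        return 0
    fi
    printf '%s\n' "$entry" >> "$fstab"
}

# Example (as root on the grid node):
# ensure_fstab_entry /etc/fstab '/dev/sdc /data xfs defaults 1 1'
```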

Check that the device is mounted correctly.

[root@grid-0-0 ~]# mount

/dev/md0 on / type ext3 (rw)
proc on /proc type proc (rw)
sysfs on /sys type sysfs (rw)
devpts on /dev/pts type devpts (rw,gid=5,mode=620)
/dev/md3 on /export type ext3 (rw)
/dev/md1 on /var type ext3 (rw)
tmpfs on /dev/shm type tmpfs (rw)
none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)
nfsd on /proc/fs/nfsd type nfsd (rw)
/dev/sdc on /data type xfs (rw)
grow-prod.local:/export/home/osg on /home/osg type nfs (rw,addr=10.1.1.1)
grow-prod.local:/export/home/cmssoft on /home/cmssoft type nfs (rw,addr=10.1.1.1)
grow-prod.local:/export/home/dsquires on /home/dsquires type nfs (rw,addr=10.1.1.1)
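Reading the `mount` output by eye works, but the same check can be scripted. The sketch below reads /proc/mounts, whose space-separated fields are device, mount point, type, options, dump and pass; the helper name `is_mounted_as` and its optional fourth argument (used to point it at a test file) are assumptions for illustration.

```shell
# Succeed if DEVICE is mounted on MOUNTPOINT with file system type FSTYPE.
# Reads /proc/mounts unless another file is given as the fourth argument.
is_mounted_as() {
    local dev=$1 mnt=$2 fstype=$3 mounts=${4:-/proc/mounts}
    awk -v d="$dev" -v m="$mnt" -v t="$fstype" \
        '$1 == d && $2 == m && $3 == t {found=1} END {exit !found}' "$mounts"
}

# Example (on the grid node):
# is_mounted_as /dev/sdc /data xfs && echo "/data is mounted as xfs"
```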

Check the volume for the correct size.

[root@grid-0-0 ~]# df -h

Filesystem            Size  Used Avail Use% Mounted on
/dev/md0               16G  6.2G  8.6G  42% /
/dev/md3              421G  163G  237G  41% /export
/dev/md1              7.8G  856M  6.6G  12% /var
tmpfs                  24G     0   24G   0% /dev/shm
/dev/sdc               55T  6.2T   49T  12% /data
grow-prod.local:/export/home/osg
                      421G  217G  182G  55% /home/osg
grow-prod.local:/export/home/cmssoft
                      421G  217G  182G  55% /home/cmssoft
grow-prod.local:/export/home/dsquires
                      421G  217G  182G  55% /home/dsquires

Run the following commands to start NFS now and make sure it starts at boot:

[root@grid-0-0 ~]# chkconfig nfs on
[root@grid-0-0 ~]# service nfs start

Log in to the head node and run the following:

[root@grow-prod ~]# vi /etc/auto.share

Add the following line:

- "/etc/auto.share"

data grid-0-0.local:/data

- Update the 411 files.

- Restart nfs.

- Restart autofs on the client nodes (the command below does this for the login appliance).

[root@grow-prod ~]# cd /var/411; make
[root@grow-prod ~]# service nfs restart
[root@grow-prod ~]# rocks run host login 'service autofs restart'

Make Data Directories

The following command, run in /data, creates the directory for the users' login accounts.

[root@grid-0-0 data]# mkdir users

The following directories will be used for grid operations. The -p option creates any missing parent directories.

[root@grid-0-0 data]# mkdir -p /share/data/se/store/user/

Enable Web Access

We need to enable web access for the grid node.

Notes

Contact Info

This Dokuwiki page is maintained by:

Daniel Squires

University of Iowa

Department of Computer Science

Email: daniel-squires@uiowa.edu